In today’s data-driven world, machine learning (ML) stands at the forefront of technological innovation, transforming industries such as healthcare, finance, marketing, and more. Professionals are keen to understand and implement machine learning algorithms to harness the power of data-driven decision-making.

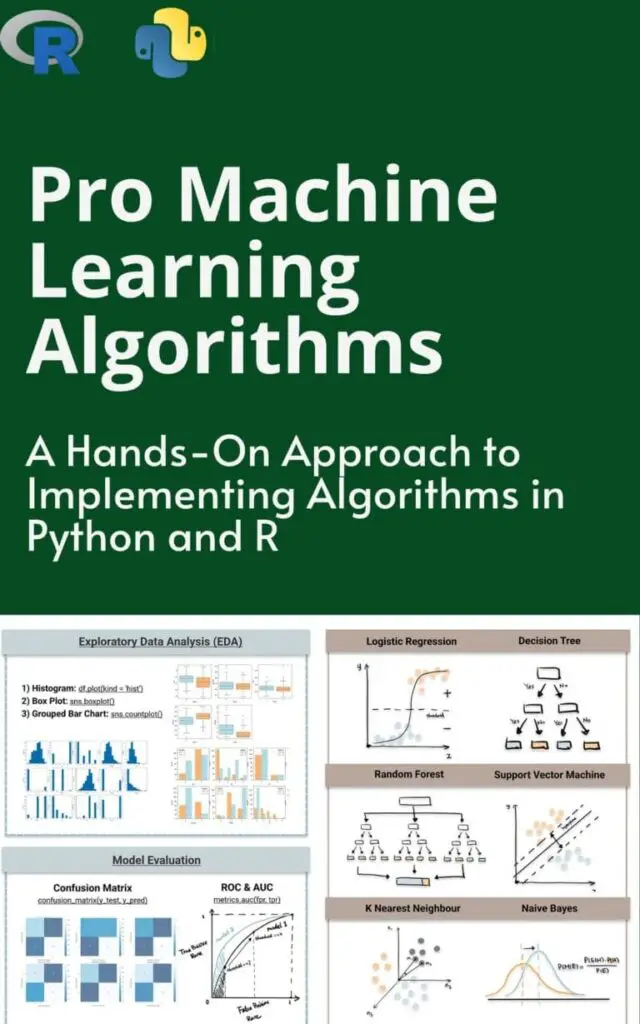

This comprehensive guide delves into the essence of machine learning algorithms, explores their various types, and offers a hands-on approach to their implementation.

What Are Machine Learning Algorithms?

Machine learning algorithms are computational techniques that enable systems to learn patterns from data and make predictions or decisions without being explicitly programmed for each task. As they process more data, these algorithms iteratively improve their performance, which makes it possible to automate complex tasks and extract actionable insights from large datasets.

Types of Machine Learning Algorithms

Machine learning algorithms are broadly categorized based on their learning paradigms and the nature of the data they process. The primary categories include:

1. Supervised Learning Algorithms

Supervised learning involves training models on labeled datasets, where each input is paired with the correct output. The model learns to map inputs to outputs, making it adept at tasks like classification and regression.

Common Supervised Learning Algorithms:

- Linear Regression: Utilized for predicting continuous values, such as forecasting sales figures.

- Logistic Regression: Employed for binary classification problems, like determining whether an email is spam or not.

- Decision Trees: Models that split data into branches to represent decisions, aiding in scenarios like customer segmentation.

- Random Forests: An ensemble of decision trees that enhances accuracy by reducing overfitting.

- Support Vector Machines (SVM): Effective for high-dimensional data classification, such as image recognition tasks.

2. Unsupervised Learning Algorithms

Unsupervised learning deals with unlabeled data, aiming to uncover hidden patterns or intrinsic structures without external guidance.

Common Unsupervised Learning Algorithms:

- K-Means Clustering: Partitions data into K distinct clusters, useful in market segmentation.

- Hierarchical Clustering: Builds a hierarchy of clusters, aiding in the visualization of data relationships.

- Principal Component Analysis (PCA): Reduces data dimensionality while preserving as much of the variance as possible, which makes it useful for data compression.

- Autoencoders: Neural networks designed for data compression and reconstruction, often used in anomaly detection.
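
To make the clustering idea concrete, here is a small sketch of K-Means (Lloyd's algorithm) in plain Python. It assumes 2-D points and, purely to keep the run deterministic, takes the first K points as the starting centroids; real implementations typically randomize the initialization. The data points are made up for illustration.

```python
import math


def kmeans(points, k, iterations=10):
    """Lloyd's algorithm: alternate an assignment step and an update step."""
    # Assumption: the first k points serve as the initial centroids.
    centroids = [points[i] for i in range(k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = tuple(
                    sum(dim) / len(members) for dim in zip(*members)
                )
    return centroids, labels


# Hypothetical unlabeled data forming two visibly separate groups.
points = [(1.0, 1.0), (8.0, 8.0), (1.5, 2.0), (9.0, 9.0), (1.0, 0.5), (8.0, 9.5)]
centroids, labels = kmeans(points, k=2)
print(labels)
print(centroids)
```

No labels are supplied; the grouping emerges only from the distances between points.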

3. Reinforcement Learning Algorithms

In reinforcement learning, an agent acquires decision-making capabilities through continuous interaction with its environment. The agent receives feedback in the form of rewards or penalties based on its actions, allowing it to learn the optimal behavior to achieve its goals over time. This approach is particularly beneficial in dynamic environments where the agent must adapt to changes.

Common Reinforcement Learning Algorithms:

- Q-Learning: A value-based method for finding the optimal action-selection policy in decision-making tasks.

- Deep Q Networks (DQN): Combines Q-Learning with deep neural networks, enhancing capabilities in complex environments.

- Policy Gradient Methods: Directly optimize the policy that the agent follows, useful in continuous action spaces.
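
The reward-driven loop described above can be sketched with tabular Q-Learning on a toy problem. The environment here is hypothetical: five states on a line, with a reward of 1 only when the agent reaches the rightmost state. The hyperparameter values (`ALPHA`, `GAMMA`, `EPSILON`) are illustrative choices, not recommendations.

```python
import random

N_STATES = 5      # states 0..4 on a line; state 4 is the goal
ACTIONS = [0, 1]  # 0 = move left, 1 = move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.1


def step(state, action):
    """Toy environment: reward 1.0 only on reaching the goal state."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def choose_action(q, state, rng):
    # Epsilon-greedy: explore occasionally, otherwise act greedily.
    if rng.random() < EPSILON:
        return rng.choice(ACTIONS)
    best = max(q[state])
    # Break ties randomly so an untrained agent does not get stuck.
    return rng.choice([a for a in ACTIONS if q[state][a] == best])


def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = choose_action(q, state, rng)
            next_state, reward, done = step(state, action)
            # Q-Learning update: move the estimate toward
            # reward + discounted value of the best next action.
            target = reward + (0.0 if done else GAMMA * max(q[next_state]))
            q[state][action] += ALPHA * (target - q[state][action])
            state = next_state
    return q


q_table = train()
# Greedy policy for the non-terminal states.
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

After training, the greedy policy picks "move right" in every non-terminal state, which is the shortest route to the reward in this toy setup.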

4. Deep Learning Algorithms

Deep learning, a specialized branch of machine learning, employs artificial neural networks composed of multiple layers. These networks are loosely inspired by the structure of the human brain and learn increasingly abstract representations of their input from one layer to the next. Deep learning excels at handling unstructured data such as images, audio, and text.

Common Deep Learning Architectures:

- Convolutional Neural Networks (CNNs): Specialized for processing grid-like data structures, commonly used in image and video recognition tasks.

- Recurrent Neural Networks (RNNs): Designed for sequential data, making them ideal for time-series analysis and natural language processing.

- Generative Adversarial Networks (GANs): Consist of two networks—a generator and a discriminator—that compete to produce realistic synthetic data, widely used in image generation.
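
What "multiple layers" buys can be illustrated with a tiny forward pass. The sketch below stacks two fully connected layers with a ReLU nonlinearity in between. The weights are hand-picked for illustration (not learned) so that the network computes XOR, a function that a single linear layer cannot represent.

```python
def relu(values):
    """Elementwise nonlinearity applied between layers."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: each row of weights feeds one output unit."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


# Hand-picked (not trained) parameters that make the network compute XOR.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]


def forward(x):
    hidden = relu(dense(x, HIDDEN_W, HIDDEN_B))  # layer 1 plus nonlinearity
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]  # layer 2 (linear output)


for a in (0.0, 1.0):
    for b in (0.0, 1.0):
        print((a, b), forward([a, b]))
```

In a real deep learning workflow the weights would be learned by gradient descent in a framework such as PyTorch, TensorFlow, or JAX, and the layers would be far larger.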

5. Ensemble Learning Algorithms

Ensemble learning methods combine the decisions from multiple models to improve overall performance. By aggregating the strengths of various models, ensemble methods often achieve better predictive accuracy than individual models.

Common Ensemble Learning Techniques:

- Bagging (Bootstrap Aggregating): Trains multiple models on bootstrap samples of the training data (random subsets drawn with replacement) and aggregates their predictions, by voting or averaging, to reduce variance.

- Boosting: Combines weak learners sequentially, each focusing on the errors of the previous model, to create a strong composite model that reduces bias.

- Stacking: Integrates multiple classification or regression models via a meta-classifier or meta-regressor, enhancing predictive performance.
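
As a rough sketch of bagging, the example below trains several one-threshold classifiers ("decision stumps") on bootstrap samples and combines them by majority vote. The 1-D dataset, the stump learner, and the function names are all assumptions made for illustration.

```python
import random


def fit_stump(samples):
    """Weak learner: choose the threshold t that minimizes the training
    errors of the rule 'predict 1 if x > t, else 0'."""
    best_t, best_errors = None, None
    for t, _ in samples:
        errors = sum((x > t) != bool(y) for x, y in samples)
        if best_errors is None or errors < best_errors:
            best_t, best_errors = t, errors
    return best_t


def bagging_fit(samples, n_models=25, seed=0):
    rng = random.Random(seed)
    thresholds = []
    for _ in range(n_models):
        # Bootstrap sample: draw len(samples) points with replacement.
        boot = [rng.choice(samples) for _ in samples]
        thresholds.append(fit_stump(boot))
    return thresholds


def bagging_predict(thresholds, x):
    # Aggregate the individual stumps by majority vote.
    votes = sum(x > t for t in thresholds)
    return 1 if votes * 2 > len(thresholds) else 0


# Hypothetical labeled data: small x values are class 0, large ones class 1.
data = [(1.0, 0), (2.0, 0), (3.0, 0), (4.0, 0),
        (6.0, 1), (7.0, 1), (8.0, 1), (9.0, 1)]
ensemble = bagging_fit(data)
print([bagging_predict(ensemble, x) for x in (0.0, 2.0, 8.0, 10.0)])
```

Each stump sees a slightly different resampling of the data, so their individual thresholds vary; the vote smooths out that variation.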

Evaluating Machine Learning Models

Assessing the performance of machine learning models is crucial to ensure their effectiveness and reliability. Evaluation involves using various metrics and validation techniques to measure how well a model generalizes to unseen data.

Key Evaluation Metrics:

- Accuracy: The proportion of correctly predicted instances out of the total instances, indicating overall correctness.

- Precision: The ratio of true positive predictions to the total predicted positives, reflecting the accuracy of positive predictions.

- Recall (Sensitivity): The ratio of true positive predictions to all actual positives, measuring the model’s ability to identify positive instances.

- F1 Score: The harmonic mean of precision and recall, providing a single balanced metric that is especially useful when classes are imbalanced.

- Mean Squared Error (MSE): The average squared difference between predicted and actual values, used in regression tasks to quantify prediction error (lower is better).
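
The metrics above follow directly from counts of true and false positives and negatives. The sketch below computes them by hand for binary labels (1 = positive, 0 = negative); the example labels and predictions are invented for illustration.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical classifier output: 3 TP, 1 FP, 1 FN, 3 TN.
labels = [1, 0, 1, 1, 0, 0, 1, 0]
predictions = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(labels, predictions)
print(metrics)

# Hypothetical regression output.
mse = mean_squared_error([3.0, 5.0, 2.0], [2.5, 5.0, 4.0])
print(mse)
```

With one false positive and one false negative out of eight predictions, accuracy, precision, recall, and F1 all come out to 0.75 here; on imbalanced data these numbers typically diverge, which is why they are reported separately.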

Validation Techniques:

- Train-Test Split: Dividing the dataset into separate training and testing sets to evaluate model performance on unseen data.

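- Cross-Validation: Partitioning the data into K folds, then repeatedly training the model on K-1 folds and evaluating it on the remaining held-out fold, so that every fold serves as the test set exactly once. Averaging the K scores gives a more robust performance estimate than a single split.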

- Bootstrapping: Sampling with replacement to create multiple training sets, allowing estimation of the model’s accuracy and variance.
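
The mechanics of K-fold cross-validation can be sketched as an index generator. This version assumes the samples are already in random order (it does not shuffle) and uses illustrative names; libraries such as scikit-learn provide more complete utilities.

```python
def k_fold_splits(n_samples, k):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [
        n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)
    ]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


splits = list(k_fold_splits(n_samples=10, k=3))
for train_idx, test_idx in splits:
    # In a real workflow: fit the model on train_idx, score it on test_idx,
    # then average the scores across folds.
    print("train:", train_idx, "test:", test_idx)
```

Every sample lands in exactly one test fold, so each data point is used for evaluation once and for training in the remaining rounds.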

Choosing the Right Algorithm

Selecting an appropriate machine learning algorithm is critical to the success of any project. Consider the following factors:

- Nature of the Problem: Determine if the task is related to classification, regression, clustering, or reinforcement learning.

- Data Characteristics: Assess the size, quality, and type of data available.

- Interpretability Requirements: Some applications require models that are easy to interpret, while others prioritize accuracy.

- Computational Resources: Complex models may require significant computational power and time.

Experimentation and iterative testing are often necessary to identify the most effective algorithm for a given problem.

Conclusion

Understanding and implementing machine learning algorithms is an essential skill in today’s data-driven world. By comprehending the various types of algorithms and their appropriate applications, professionals can develop models that provide actionable insights and drive innovation across industries. A hands-on approach, combined with continuous learning and adaptation, will enable practitioners to effectively tackle complex challenges and contribute to the advancement of artificial intelligence.