Fundamentals of Deep Learning

Would you like to learn the fundamentals of Deep Learning? If yes, you are in the right place. By the end of this article, you will understand the basics of Deep Learning, so read it through to the end.

Let’s get started!

Hello & Welcome!

Let’s start with the definition of DL-

What is Deep Learning

Deep Learning is a specific field within Machine Learning, and Machine Learning is a field within Artificial Intelligence. Deep Learning is loosely inspired by the way humans understand and make decisions.

Deep Learning (DL) automatically discovers useful patterns and features within data. This data can exist in many formats, such as images, text, videos, or audio.

DL can categorize images into different classes. I will explain it in the following section.

Deep Learning is able to process both structured and unstructured data.

To learn more about Deep Learning, read this article: What is Deep Learning and Why is it Popular?

Now, we will understand the purpose of using Deep Learning.

Why DL

Deep Learning is mainly used when the dataset is very large. DL works very well when you have a large amount of data.

This feature makes DL very popular.

Another benefit of using deep learning is that it can extract important features automatically. This means you do not have to define the features by hand. DL learns them from the data.

Another key reason for choosing Deep Learning is its ability to solve complicated real-life problems.

So, these are the three key reasons that make deep learning popular.

Now, let’s see how DL works.

How does Deep Learning work?

DL uses an Artificial Neural Network. Artificial Neural Networks are loosely modeled on the human brain.

The human brain consists of neurons. These neurons are connected to each other. A neuron from the human brain looks like this.

This image shows a neuron with its branches (dendrites) and tail (axon).

Have you noticed that your hand pulls back quickly when you touch something hot?

This is what happens inside you.

When you touch something hot, your skin sends a signal to the neurons. The neurons then signal your hand to pull away. So that’s all about the human brain.

Artificial Neural Network

An Artificial Neural Network works in the same way.

An Artificial Neural Network also has neurons, and they work in a similar manner.

If you are interested in exploring neurons in Artificial Neural Networks in more detail, read this article: Artificial Neural Network: What is a Neuron? Ultimate Guide.

It explains neurons and layers in an ANN.

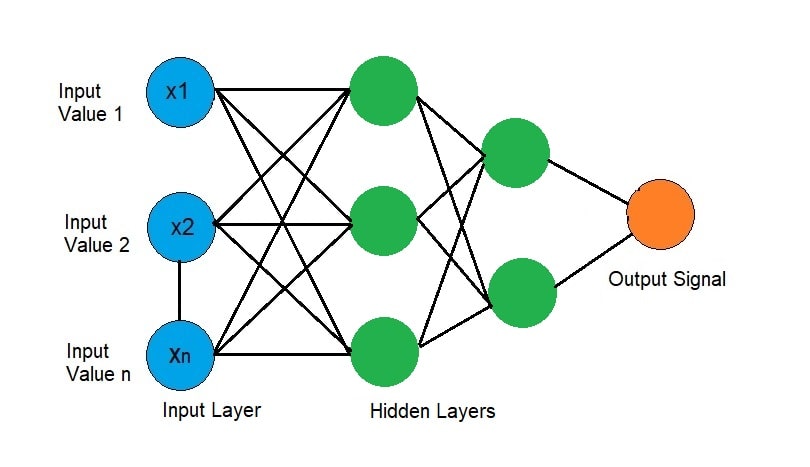

An Artificial Neural Network has three types of layers-

1. Input Layer.

2. Hidden Layer.

3. Output Layer.

Let’s see it in this image-

Every circle you notice here is a neuron. Each neuron in one layer is connected to the neurons in the next layer.

Data is passed to the input layer. The data then goes from the input layer to the hidden layer. The hidden layer performs certain operations and passes the result to the output layer.

This is how a simple Artificial Neural Network works.

But in these three layers, various computations are performed.

Let’s look at them briefly.

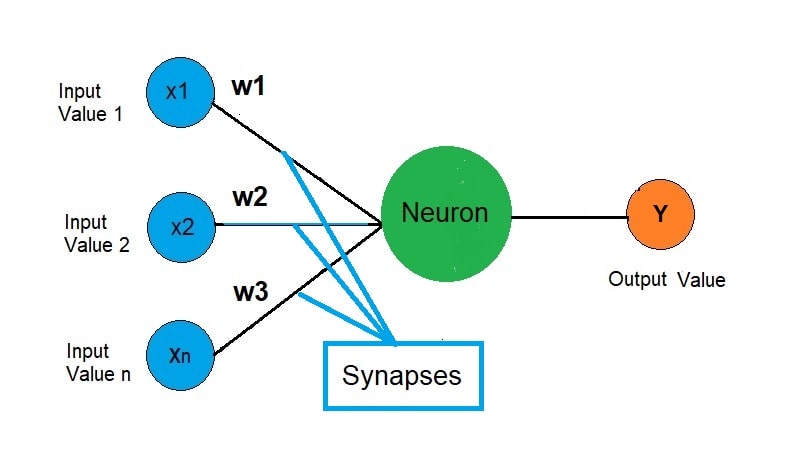

One neuron is connected with others through synapses. Synapses are the connecting lines between two neurons.

Each synapse has an associated weight. Let’s understand with the help of this image.

Activation Function

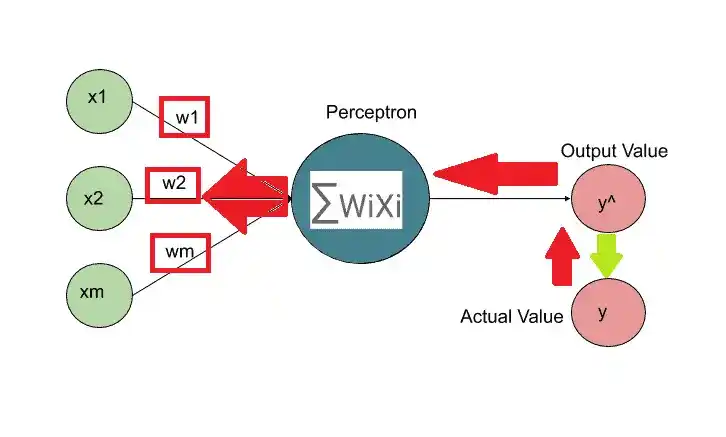

So, with these weights, two operations are performed.

1. Weighted Sum.

2. Activation Function.

The first step is the weighted sum. Each input value is multiplied by its weight, and the results are added together. In this case, the input values are the data provided to the input layer.

The weighted sum looks something like this-

$$ x_1 w_1 + x_2 w_2 + x_3 w_3 + \dots + x_n w_n $$

First, the neuron calculates the weighted sum, then it applies the activation function. Based on the result, the neuron decides whether the value should be passed to the next layer or not.
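To make the two steps concrete, here is a minimal Python sketch of a single neuron. The input values, the weights, and the function names are made up for illustration, and the threshold function is only one possible choice of activation.

```python
def weighted_sum(inputs, weights):
    """Multiply each input by its weight and add the products together."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    return sum(x * w for x, w in zip(inputs, weights))


def threshold(z):
    """Threshold activation (assumed cut-off at 0): 1 if z >= 0, else 0."""
    return 1 if z >= 0 else 0


def neuron_output(inputs, weights):
    """Step 1: weighted sum. Step 2: activation function."""
    return threshold(weighted_sum(inputs, weights))


inputs = [1.0, 2.0, 3.0]      # hypothetical input values x1, x2, x3
weights = [0.5, -1.0, 0.25]   # hypothetical weights w1, w2, w3

print(weighted_sum(inputs, weights))   # 0.5 - 2.0 + 0.75 = -0.75
print(neuron_output(inputs, weights))  # 0, because the sum is below 0
```

With these numbers the weighted sum is negative, so the threshold neuron outputs 0 and the signal is not passed on.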

The activation function plays an important role. It generates a result based on the given input.

Both the hidden layer and the output layer apply an activation function.
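As a rough illustration of how data flows from the input layer through the hidden layer to the output layer, here is a small Python sketch. The network size (2 inputs, 2 hidden neurons, 1 output neuron), the weights, and the use of the sigmoid function in both layers are assumptions chosen for the example. Bias terms are left out to keep it short.

```python
import math


def sigmoid(z):
    """Sigmoid activation: squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weight_rows, activation):
    """Each row of weights belongs to one neuron: weighted sum, then activation."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)))
        for row in weight_rows
    ]


def forward(inputs, hidden_weights, output_weights):
    """Input layer -> hidden layer -> output layer."""
    hidden = layer_forward(inputs, hidden_weights, sigmoid)
    return layer_forward(hidden, output_weights, sigmoid)


# Hypothetical weights: 2 hidden neurons with 2 inputs each, 1 output neuron.
hidden_weights = [[0.2, -0.4], [0.7, 0.1]]
output_weights = [[0.5, -0.3]]

prediction = forward([1.0, 2.0], hidden_weights, output_weights)
print(prediction)  # a list with one value between 0 and 1
```

The same `layer_forward` function is used twice, which mirrors the idea that both the hidden layer and the output layer apply an activation function.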

The most popular activation functions are-

1. Threshold Activation Function.

2. Sigmoid Function.

3. Rectifier Function (ReLU).

4. Hyperbolic Tangent (tanh).

5. Linear Function.
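The five functions in this list can each be written in a line or two of Python. This is only a sketch using the standard definitions. The function names are my own, and the threshold cut-off at 0 is an assumption, since some texts define it slightly differently.

```python
import math


def threshold(z):
    """Outputs 1 when z is 0 or more, otherwise 0."""
    return 1.0 if z >= 0 else 0.0


def sigmoid(z):
    """Smooth curve from 0 to 1."""
    return 1.0 / (1.0 + math.exp(-z))


def rectifier(z):
    """ReLU: keeps positive values, replaces negative values with 0."""
    return max(0.0, z)


def hyperbolic_tangent(z):
    """Smooth curve from -1 to 1."""
    return math.tanh(z)


def linear(z):
    """Passes the value through unchanged."""
    return z


for name, fn in [
    ("threshold", threshold),
    ("sigmoid", sigmoid),
    ("rectifier", rectifier),
    ("tanh", hyperbolic_tangent),
    ("linear", linear),
]:
    # Compare how each function treats a negative, zero, and positive input.
    print(name, [round(fn(z), 3) for z in (-2.0, 0.0, 2.0)])
```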

Want to learn more about these activation functions? Read this article: Activation Function and Its Types - Which one to select. This article will help you understand activation functions.

The activation function generates the output according to the input given.

Gradient Descent

This output is the predicted output. Now compare the predicted output with the actual output and check the difference between the two.

The cost function measures the difference between the prediction and the actual output.

The formula for calculating the cost function is-

$$ \text{cost function} = \frac{1}{2} (y - \hat{y})^2 $$

Where $y$ is the actual output and $\hat{y}$ is the predicted output.
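Here is the same formula as a tiny Python function, with made-up numbers plugged in. The function name and the example values are only for illustration.

```python
def cost(actual, predicted):
    """Half of the squared difference between actual and predicted output."""
    return 0.5 * (actual - predicted) ** 2


print(cost(1.0, 0.5))  # 0.5 * (0.5 ** 2) = 0.125
print(cost(3.0, 3.0))  # 0.0, a perfect prediction has zero cost
```

The further the prediction is from the actual output, the larger the cost becomes.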

Our goal is to minimize the cost function.

So, once the Neural Network calculates the cost function, it backpropagates the error to update the weights. This picture makes it easy to understand.

The neural network updates the weights and predicts the output again. This predicted output is compared with the actual output.

By repeating this process, the cost function moves toward its minimum value.

Now, you may have a question in mind: how can the cost function be reduced?

Right?

The answer is that we use the Gradient Descent method to reduce the cost function.

The goal of Gradient Descent is to make the cost function as small as it can be.
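Here is a minimal sketch of Gradient Descent on the simplest possible case: one weight, one training example, and a prediction of `weight * x`. The example values, the learning rate of 0.1, and the number of steps are assumptions picked so the numbers are easy to follow. A real network repeats the same idea for every weight.

```python
def cost(actual, predicted):
    """Half of the squared difference, as in the formula above."""
    return 0.5 * (actual - predicted) ** 2


def gradient(weight, x, actual):
    """Slope of the cost with respect to the weight when predicted = weight * x."""
    return -(actual - weight * x) * x


def gradient_descent(x, actual, weight=0.0, learning_rate=0.1, steps=50):
    """Repeatedly move the weight a small step against the slope."""
    history = []
    for _ in range(steps):
        history.append(cost(actual, weight * x))
        weight -= learning_rate * gradient(weight, x, actual)
    return weight, history


# Hypothetical example: input 2.0 should produce output 4.0, so the ideal weight is 2.0.
final_weight, costs = gradient_descent(x=2.0, actual=4.0)
print(round(final_weight, 4))  # close to 2.0
print(costs[0], costs[-1])     # the cost starts at 8.0 and shrinks toward 0
```

Each step moves the weight a little in the direction that lowers the cost, which is why the cost keeps shrinking.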

For more details on Gradient Descent, read this article: Easy Explanation of Gradient Descent in Neural Networks. It explores how Gradient Descent reduces the cost function.

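A convex cost function makes Gradient Descent simple and reliable.

But what if your cost function is not convex?

With a non-convex cost function, plain Gradient Descent can get stuck in a local minimum instead of reaching the lowest point.

So, for that, Stochastic Gradient Descent is used. It updates the weights after each training example instead of after the whole dataset.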
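The sketch below shows the update pattern of Stochastic Gradient Descent: the training examples are shuffled, and the weight is updated after every single example. The data, the learning rate, the number of epochs, and the fixed random seed are all assumptions for the demo. Note that this toy cost function is actually convex, so the code only illustrates how the updates are made, not how a local minimum is escaped.

```python
import random


def sgd(samples, weight=0.0, learning_rate=0.05, epochs=20, seed=0):
    """Fit predicted = weight * x, updating the weight one sample at a time."""
    rng = random.Random(seed)   # fixed seed so the run is repeatable
    samples = list(samples)     # copy, so the caller's list is not reordered
    for _ in range(epochs):
        rng.shuffle(samples)    # visit the samples in a random order
        for x, actual in samples:
            predicted = weight * x
            # Gradient of 1/2 * (actual - predicted)^2 with respect to the weight
            grad = -(actual - predicted) * x
            weight -= learning_rate * grad
    return weight


# Hypothetical data where the actual output is always 3 times the input.
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0), (4.0, 12.0)]
print(round(sgd(data), 4))  # close to 3.0
```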

What is a convex cost function? What is Stochastic Gradient Descent, and how does it work? For that, read this article: Stochastic Gradient Descent- A Super Easy Complete Guide! Learn the basics of Stochastic Gradient Descent here.

So, that’s all about how DL works.

By now, you understand the basic working of deep learning.

How do neural networks work on real-world problems? Read this article - How does Neural Network Work? A step by step Guide. I have discussed a real-life example there: Property Evaluation.

Now, let’s see DL Algorithms.

Algorithms of Deep Learning

Here is a list of the most-used DL Algorithms:

1. Feedforward Neural Network.

2. Backpropagation.

3. Convolutional Neural Network.

4. Recurrent Neural Network.

5. Generative Adversarial Networks (GAN).

Learn the most-used algorithms in detail - Mostly used DL Algorithms List, You Need to Know.

Here, I will give a simple explanation of Convolutional Neural Networks (CNN) and Generative Adversarial Networks (GAN).

Let’s start with a Convolutional Neural Network

Convolutional Neural Network

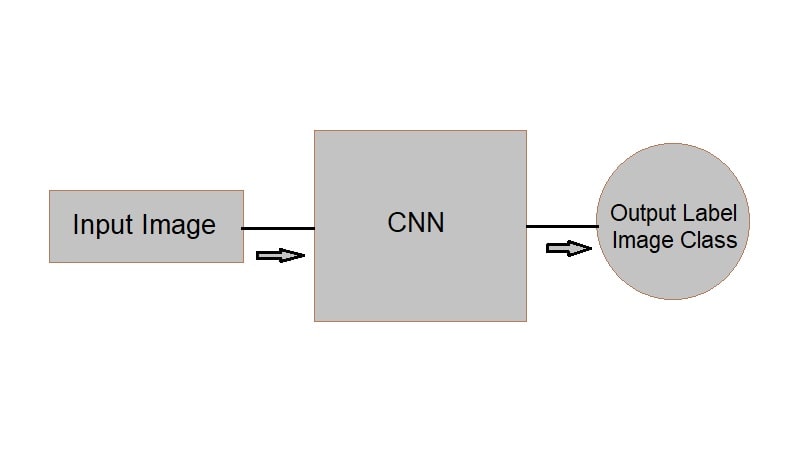

CNNs are mainly used for Image Recognition, and they are also applied in Natural Language Processing. The CNN is a key algorithm in deep learning.

A Convolutional Neural Network (CNN) takes an image, finds key features, and predicts what it shows.

Suppose you see a lion. Your brain looks at specific features to recognize it. These features could be the lion’s ears, eyes, or something else. Your brain recognizes these features and signals that this is a lion.

Similarly, a Convolutional Neural Network looks at an image and recognizes it by its features.

This image explains how a Convolutional Neural Network works.

A CNN analyzes an image, identifies features, and classifies the image.

There are various steps in a Convolutional Neural Network. These steps are-

1. Convolution Operation.

2. ReLU Layer.

3. Pooling.

4. Flattening.

5. Full Connection.
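The five steps above can be sketched in plain Python on a tiny 5x5 "image". Everything here is a simplified assumption: the image, the 2x2 filter, and the final weights are made up, the filter is fixed instead of learned, and real CNNs use many filters and a library such as TensorFlow or PyTorch.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products.
    (No kernel flip, which is how deep learning libraries usually do it.)"""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Replace every negative value with 0."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Keep only the largest value in each size x size block."""
    return [
        [
            max(feature_map[i + a][j + b]
                for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


def flatten(feature_map):
    """Turn the 2D grid into one long list."""
    return [v for row in feature_map for v in row]


def fully_connected(features, weights):
    """Weighted sum of the flattened features (one output neuron)."""
    return sum(f * w for f, w in zip(features, weights))


# Hypothetical 5x5 image: a bright vertical stripe in columns 1 and 2.
image = [[0, 1, 1, 0, 0] for _ in range(5)]
kernel = [[-1, 1], [-1, 1]]   # responds to a dark-to-bright vertical edge

feature_map = convolve2d(image, kernel)    # 1. Convolution -> 4x4 grid
activated = relu(feature_map)              # 2. ReLU
pooled = max_pool(activated)               # 3. Pooling -> 2x2 grid
flat = flatten(pooled)                     # 4. Flattening
score = fully_connected(flat, [0.5] * 4)   # 5. Full connection

print(feature_map[0])  # [2, 0, -2, 0]
print(pooled)          # [[2, 0], [2, 0]]
print(flat, score)     # [2, 0, 2, 0] 2.0
```

The filter gives a positive value at the left edge of the stripe and a negative value at the right edge. ReLU removes the negative value, pooling shrinks the grid, and the final weighted sum turns the remaining features into a single score.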

Here, I will not discuss it in detail.

If you want to know the step-by-step procedure of a CNN, read this article: Basics of Convolutional Neural Network – Everything You need to know.

Next, we will look at a deep learning algorithm called Generative Adversarial Networks (GAN).

Generative Adversarial Networks (GAN)

A Generative Adversarial Network (GAN) is an advanced method used in deep learning. It is usually treated as an unsupervised learning method, because it learns from unlabeled data.

GANs use two deep learning models. These are called the Generator and the Discriminator.

They both compete with each other. The Generator creates fake samples, and the Discriminator tries to tell them apart from real ones. This competition makes both models better over time.

GANs are used to create content like images, videos, and audio.

GANs are used in various fields. Some of the most common are-

1. Music.

2. Deepfake generation and detection.

3. Text to Image Generation.

4. Medicine.

5. Image-to-Image Translation.

6. Enhance the resolution of an Image.

7. Interactive Image Generation.

A CNN looks at an image and finds its important features. A GAN studies image features and then creates new images from them.

To learn more about Generative Adversarial Networks (GAN), such as how they work and how they are trained, read this article: Understanding Generative Adversarial Networks: A Simple Explanation

Now, let’s move on to the next section.

Deep Learning vs Machine Learning

DL is overtaking traditional Machine Learning in many areas. And the reason is its features.

Deep learning and traditional machine learning have three basic differences.

1. DL gives excellent results on large datasets. Traditional machine learning algorithms work well on small and medium datasets, but their performance tends to level off as the amount of data grows. Deep learning usually keeps improving as more data becomes available.

2. To train a traditional Machine Learning model, you usually need to design and select the features manually. But DL learns the features automatically from the data. This makes DL much more powerful than traditional Machine Learning for such tasks. Manual feature engineering takes a lot of time, especially with medium to large datasets.

3. Traditional Machine Learning often struggles with complex real-world problems such as image and speech recognition. Deep Learning algorithms handle these problems much better. This is one of the reasons to choose deep learning instead of traditional machine learning.

I hope you now understand the difference clearly.

Want to learn how Deep Learning is different from a Neural Network? Read this article to explore the main differences: Deep Learning vs Neural Network Explained

Now, let’s move on to the next section.

Examples of Deep Learning

DL is used in almost every field nowadays. But the most common areas are-

1. Self-driving cars – Cars can now drive on their own without drivers. How amazing is that? And that happens because of DL. In a self-driving car, deep learning is used to observe and understand the surroundings, identifying pedestrians, traffic lights, roads, and buildings as objects.

2. Translation – Tools that translate text or speech into another language are built using Deep Learning (DL).

3. Chatbot – When you ask customer care a question, who do you think answers? Often it is a chatbot that answers questions the way a human would. That happens because of DL.

4. Robotics – Robots use deep learning to perceive their environment and act accordingly.

5. Medical Field – The healthcare sector also uses Deep Learning, for example to detect cancer cells in humans. Locating the area of cancer cells is one example, and DL has many other uses in medicine.

Now, let’s see the skills required for DL.

Skills Required for Deep Learning

DL is becoming more popular day by day. Understanding DL and ML can be rewarding.

As various fields are adopting DL, knowledge of DL is important.

The following six skills are essential for learning Deep Learning –

1. Maths Skills.

2. Programming Skills.

3. Data Engineering Skills.

4. Machine Learning Knowledge.

5. Knowledge of DL Algorithms.

6. Knowledge of DL Frameworks.

Here, I will not go into detail about these skills.

But,

If you want to know more about the important skills needed for Deep Learning, read this article: Important Skills Required for Deep Learning Success. This can help you understand how and where to start learning DL.

Now, it’s time to wrap up.

Conclusion

After reading this, your basics of Deep Learning should be clear. Deep Learning is a powerful technique within Machine Learning. Its scope is very broad and its future is bright.

In the coming years, we will see more interesting inventions in deep learning.

So this is not the end. It’s the beginning of the Deep Learning era.

I hope you found this article helpful.

Thanks for reading.

Enjoy Learning.

FAQ

1. What exactly is Deep Learning?

Deep Learning is a technique of Machine Learning. It is loosely inspired by how the human brain works. It uses an Artificial Neural Network, which has three types of layers: an input layer, one or more hidden layers, and an output layer.

2. What are Deep Learning Examples?

Almost every industry now uses deep learning. Self-driving cars, robots, chatbots, and Alexa are a few examples.

3. What is deep learning vs Machine Learning?

DL works very well on large datasets, whereas the performance of traditional Machine Learning tends to level off as the data grows. DL extracts features automatically, whereas traditional Machine Learning usually needs manually designed features. DL can handle complex real-world applications, but traditional machine learning has limitations.

4. Is Deep Learning important?

It depends on the application and nature of the problem but in general, the answer is yes. If you have a big data set, then DL is preferable.

5. Is CNN deep learning?

CNN is a class of DL algorithms. It is mainly used for image recognition. It is a very powerful algorithm in DL.

6. Who invented Deep Learning?

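Rina Dechter introduced the term “Deep Learning” to the machine learning community in 1986, and Igor Aizenberg and colleagues used it for Artificial Neural Networks in 2000. Geoffrey Hinton is widely regarded as one of the pioneers of deep learning. And Yann LeCun is often called the father of CNNs.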

7. Why is it called deep learning?

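Because of its structure. An Artificial Neural Network has three types of layers, and there may be many hidden layers between the input and the output. The more hidden layers a network has, the deeper it is, and a deeper network can learn more complex patterns. That’s why it is called Deep Learning.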

8. What is ReLU in Deep Learning?

ReLU stands for Rectified Linear Unit. ReLU is a type of activation function. It is one of the most commonly used activation functions. ReLU is mostly applied in hidden layers.

9. Is CNN an Algorithm?

Yes. CNN is a DL algorithm that is mainly used for image recognition.

10. What are Deep Learning Algorithms?

Here is a list of the most-used DL Algorithms:

1. Feedforward Neural Network.

2. Backpropagation.

3. Convolutional Neural Network.

4. Recurrent Neural Network.

5. Generative Adversarial Networks (GAN).
